If you’ve spent any time on X (formerly Twitter) lately, you’ve probably noticed a new trend called Vibe Coding.

It’s a fresh approach to software development in which, instead of writing source code by hand, the creator simply instructs an AI to implement ideas and solve problems. The developer’s role shifts away from coding toward design, planning, writing clear instructions, and evaluating quality.

Recently, the very first “Vibe Jam” took place: a hackathon-style game jam focused on creating games with the Vibe Coding approach. Since game development was what got me into programming in the first place, I jumped at the opportunity to join. In this article, I want to share my experience from Vibe Jam along with my personal perspective on the Vibe Coding phenomenon: who stands to benefit most from it, what its strengths and weaknesses are, and whether it could realistically replace traditional programming.

The hottest new programming language is English

My Experience with Vibe Jam

The core gameplay, a physics-based car driving model, proved to be a challenge right from the start, not only for me but also for the AI itself. Language models may understand code, but they still struggle to grasp the physical world: spatial orientation, axes, and directions. Early development led to some hilarious moments:

- The wheels were rotated by 90°, and when I tried to steer, they spun around the wrong axis.

- By alternating the gas and brake, you could “rock” the car, and if you timed it right, just as the nose tilted upward, it would literally launch into space.

- The car’s body lagged behind the fast-spinning wheels; for some reason, the chassis moved about three times slower than the wheels were turning (maybe it was a mass issue?).

- At slightly higher speeds, even a small turn would flip the car upside-down (this one took me ages to fix 😩).
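The last issue, cars flipping on small turns at speed, is a classic in arcade car physics. The article doesn't say how it was finally fixed, so the following is only a minimal sketch of one common mitigation, speed-sensitive steering: the maximum wheel angle shrinks as speed rises, and the angle moves gradually toward its target instead of jumping. The function names (`steeringAngle`, `approach`, `updateSteering`) and every constant are hypothetical values for illustration, not taken from the game.

```typescript
// Hypothetical tuning values, chosen for illustration only.
const MAX_STEER_RAD = 0.6;  // full lock at standstill (about 34 degrees)
const MIN_STEER_RAD = 0.08; // full lock at or above HIGH_SPEED
const HIGH_SPEED = 40;      // speed in m/s at which lock reaches its minimum
const STEER_RATE = 2.5;     // max change of wheel angle per second, in radians

/** Maps a steering input in [-1, 1] to a target wheel angle that shrinks with speed. */
function steeringAngle(input: number, speed: number): number {
  const clamped = Math.max(-1, Math.min(1, input));
  // 0 at standstill, 1 at HIGH_SPEED or faster (reversing counts as speed too).
  const t = Math.min(Math.abs(speed) / HIGH_SPEED, 1);
  const maxAngle = MAX_STEER_RAD + (MIN_STEER_RAD - MAX_STEER_RAD) * t;
  return clamped * maxAngle;
}

/** Moves `current` toward `target` by at most `maxDelta`, so steering cannot jump. */
function approach(current: number, target: number, maxDelta: number): number {
  if (current < target) return Math.min(current + maxDelta, target);
  return Math.max(current - maxDelta, target);
}

/** Per-frame update: compute the speed-limited target, then rate-limit the change. */
function updateSteering(current: number, input: number, speed: number, dt: number): number {
  return approach(current, steeringAngle(input, speed), STEER_RATE * dt);
}
```

If the car were built on Cannon-es's `RaycastVehicle`, the resulting angle would be passed to `setSteeringValue` for the front wheels each frame; lowering the wheels' `rollInfluence` is another lever often used against rollovers.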

I finally landed on a satisfying driving model and controls on my fourth attempt, which meant starting the project from scratch, empty repo and all, four times. Still, to be fair to the language models, the fact that I eventually got a working result for such a complex task feels like a miracle. And once I had overcome the hardest part, the rest of development went much more smoothly (though a few areas still required some extra patience).

What matters most, though, is that I genuinely enjoyed every step of the development, from implementing ideas to generating visuals, music, and sound effects. Thanks to AI tools, the pace of implementation was extremely rewarding, and the only thing that ever made me stop was the late hour.

In Case You’re Interested in the Technical Details

- The game runs directly in standard web browsers, entirely on the client side. Its core is built on the Three.js library, which renders 3D graphics in JavaScript, and Cannon-es, a standalone JavaScript physics engine that provides the physics simulation alongside Three.js. Aside from these two, the project uses only Vite as its build tool. The code follows a modular, object-oriented approach.
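Three.js and Cannon-es keep separate representations of each object, so a typical loop advances the physics world and then copies every body's position and rotation onto its mesh before rendering. The following is a minimal, dependency-free sketch of that pattern in the modular, object-oriented spirit described above. The `Transformable` interface, the `GameObject` and `FixedStepLoop` classes, and the step constants are our own assumptions rather than the game's code; with Cannon-es, `stepPhysics` would typically wrap `world.step(FIXED_DT)`, and `World.step` also accepts a time-since-last-call and a max-substeps argument to do this accumulation itself.

```typescript
// Structural stand-ins for a Cannon-es body and a Three.js mesh. These
// interfaces are assumptions that keep the sketch dependency-free.
interface Vec3Like { x: number; y: number; z: number }
interface QuatLike { x: number; y: number; z: number; w: number }
interface Transformable { position: Vec3Like; quaternion: QuatLike }

/** One game entity: a rendered mesh plus the physics body that drives it. */
class GameObject {
  readonly mesh: Transformable;
  readonly body: Transformable;

  constructor(mesh: Transformable, body: Transformable) {
    this.mesh = mesh;
    this.body = body;
  }

  /** Copies the simulated position and rotation onto the rendered mesh. */
  sync(): void {
    const { position: p, quaternion: q } = this.body;
    this.mesh.position.x = p.x;
    this.mesh.position.y = p.y;
    this.mesh.position.z = p.z;
    this.mesh.quaternion.x = q.x;
    this.mesh.quaternion.y = q.y;
    this.mesh.quaternion.z = q.z;
    this.mesh.quaternion.w = q.w;
  }
}

const FIXED_DT = 1 / 60; // physics step in seconds (assumed value)
const MAX_SUBSTEPS = 5;  // cap work per frame so one long frame cannot snowball

/** Steps physics in fixed increments, then syncs every object once per frame. */
class FixedStepLoop {
  private accumulator = 0;
  private readonly stepPhysics: (dt: number) => void; // e.g. wraps world.step
  private readonly objects: GameObject[];

  constructor(stepPhysics: (dt: number) => void, objects: GameObject[]) {
    this.stepPhysics = stepPhysics;
    this.objects = objects;
  }

  /** Call once per rendered frame; returns how many physics steps were run. */
  update(frameSeconds: number): number {
    this.accumulator += frameSeconds;
    let steps = 0;
    while (this.accumulator >= FIXED_DT && steps < MAX_SUBSTEPS) {
      this.stepPhysics(FIXED_DT);
      this.accumulator -= FIXED_DT;
      steps++;
    }
    // Only reachable when the cap was hit: drop the backlog instead of carrying it.
    if (this.accumulator >= FIXED_DT) this.accumulator = 0;
    for (const obj of this.objects) obj.sync();
    return steps;
  }
}
```

A fixed step keeps the simulation's behaviour consistent regardless of frame rate, which matters for vehicle physics that can otherwise feel different on fast and slow machines.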

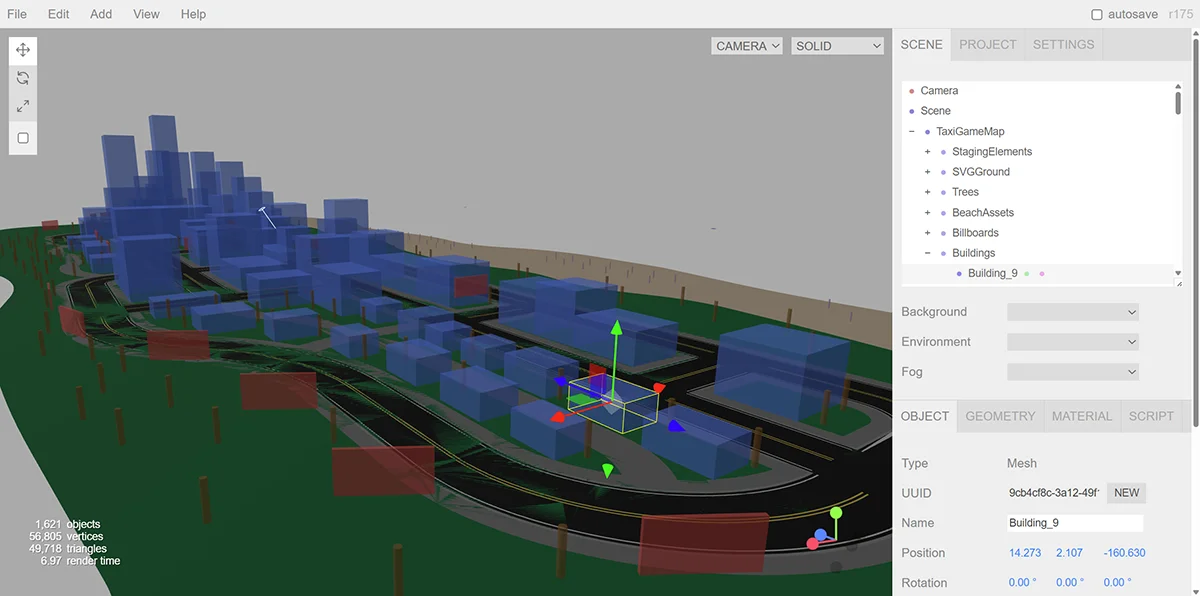

- The game world map was built by hand. I started by designing a “blueprint” of the map in Adobe Illustrator, which I then imported into the game as an SVG file. Buildings and other game assets were placed as primitive blocks (cuboids and cylinders) using the Three.js online editor. The game code then took care of placing windows on buildings and randomly assigning textures to billboards and other elements. However, I still had to spend a good amount of time working with AI on scripts for two-way data conversion, since the game and the Three.js editor use different data representations. The map was iteratively refined and extended with new object types.

- For deployment and hosting, I used Netlify, which made the entire process extremely simple and completely free. I did, however, decide to stream the game’s music from an external service, SoundCloud, to avoid unnecessarily high data transfer from the host. The AI handled the integration with this service flawlessly.

- I spent a total of 65 hours over roughly two weeks working on the game. The AI services I used during this period cost €72 in total, largely because I chose not to cut corners: I paid for access to the best models without usage limits. That said, these days you can achieve quite a lot even with zero budget.
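The two-way conversion between the game's map data and the Three.js editor, mentioned in the map bullet above, can be illustrated with a small sketch. The article doesn't describe either format, so both shapes here are hypothetical: `MapBlock` is an invented game-side record and `EditorObject` is a heavily simplified stand-in for an editor object (a real Three.js editor export is a richer JSON scene). The property worth testing is that the two functions are exact inverses, so a map can travel to the editor and back without losing data.

```typescript
// Hypothetical game-side format: one record per primitive block on the map.
type BlockKind = "building" | "pillar";

interface MapBlock {
  id: string;
  kind: BlockKind;
  x: number;      // centre of the footprint on the ground plane
  z: number;
  width: number;  // extent along x (a pillar's diameter)
  depth: number;  // extent along z
  height: number;
}

// Hypothetical, simplified editor-side object (a real editor export holds more).
interface EditorObject {
  name: string;                       // "<kind>:<id>", used to recover game data
  primitive: "box" | "cylinder";      // unit-sized primitive, stretched by scale
  position: [number, number, number]; // centre of the object in world space
  scale: [number, number, number];
}

const PRIMITIVE_FOR: Record<BlockKind, EditorObject["primitive"]> = {
  building: "box",
  pillar: "cylinder",
};

function isBlockKind(s: string): s is BlockKind {
  return s === "building" || s === "pillar";
}

/** Game -> editor: primitives are centred on their origin, so lift by half the height. */
function toEditor(block: MapBlock): EditorObject {
  return {
    name: `${block.kind}:${block.id}`,
    primitive: PRIMITIVE_FOR[block.kind],
    position: [block.x, block.height / 2, block.z],
    scale: [block.width, block.height, block.depth],
  };
}

/** Editor -> game: the exact inverse of toEditor, with basic validation. */
function fromEditor(obj: EditorObject): MapBlock {
  const sep = obj.name.indexOf(":");
  const kind = sep < 0 ? "" : obj.name.slice(0, sep);
  if (!isBlockKind(kind)) throw new Error(`Unknown object name: ${obj.name}`);
  if (obj.primitive !== PRIMITIVE_FOR[kind]) {
    throw new Error(`${obj.name} should be a ${PRIMITIVE_FOR[kind]}`);
  }
  const [x, , z] = obj.position; // y is derived from height, so it is not stored
  const [width, height, depth] = obj.scale;
  return { id: obj.name.slice(sep + 1), kind, x, z, width, depth, height };
}
```

Encoding the kind and id in the object's name is just one simple choice; on real Three.js objects, the `userData` property is a sturdier place for such metadata, since it is kept when a scene is serialized to JSON.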

Biggest Surprises: Music, Sounds, and Billboards

Using AI tools for coding isn’t entirely new to me. Although I initially found early versions of GitHub Copilot more annoying than helpful, I’ve always actively looked for ways to incorporate AI into my workflow, especially for brainstorming ideas, discussing the best solutions, and learning new technologies.

But since the arrival of the so-called “AI-first” editor Cursor AI and Anthropic’s Claude models (currently Claude 3.7 Sonnet), it’s become clear to me that building weekend hobby projects has never been easier or faster. So this time, the biggest surprises came from other areas.

A Brief Summary of Innovations in Code Generation

- AI tools used in programming are no longer limited to merely auto-completing the current line or simple code blocks.

- Key innovations include in-editor chat, the ability for AI to edit multiple related files at once, easy browsing and approval of suggested changes, and convenient context referencing with the @ symbol. These tools also use the IDE’s context: they can automatically find the files they need, fix linting warnings as they appear, run tests, and execute code on their own. This is known as agentic behavior.

- A major accelerator in this space has been Cursor AI, a fork of VS Code built with the intention of embedding AI into the very core of the editor (“AI-first”). Shortly after, other similar editors emerged (like Windsurf, Trae, and others), and even GitHub Copilot has started evolving to catch up with this new wave of competition. Unfortunately, JetBrains environments are still lagging behind.

- Advances have also occurred at the level of the large language models (LLMs) themselves. The accuracy and relevance of code suggestions have improved significantly thanks to a new generation of models such as Anthropic’s Claude Sonnet (currently version 3.7) and, most recently, Google’s Gemini 2.5 Pro. These models better understand context and more complex prompts, which reduces the need for manual intervention by the developer.

- A promising development is the gradual adoption of the Model Context Protocol (MCP), which has the potential to give AI tools access to entirely new datasets, services, and tools. In simplified terms, think of it as a unified API standard for AI agents, enabling them to request actions such as generating graphic assets (or anything else imaginable) directly from the code editor.
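To make the “unified API” idea more concrete: MCP messages are JSON-RPC 2.0, and an agent invokes a server's tool with a `tools/call` request carrying the tool's `name` and its `arguments`; the result holds a list of content items such as text or images. The sketch below only builds and reads such messages. The `generate_texture` tool and its parameters are hypothetical, and a real client would also perform the protocol's initialization handshake and usually discover available tools via `tools/list` first.

```typescript
// JSON-RPC 2.0 request shape used by MCP.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: P;
}

interface ToolCallParams {
  name: string;
  arguments: Record<string, unknown>;
}

// A subset of the content item types an MCP tool result can contain.
type ToolContent =
  | { type: "text"; text: string }
  | { type: "image"; data: string; mimeType: string }; // data is base64-encoded

interface ToolCallResult {
  content: ToolContent[];
  isError?: boolean;
}

let nextId = 1;

/** Builds a `tools/call` request with a fresh request id. */
function toolCallRequest(name: string, args: Record<string, unknown>): JsonRpcRequest<ToolCallParams> {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

/** Joins the text parts of a tool result, throwing if the tool reported an error. */
function resultText(result: ToolCallResult): string {
  const text = result.content
    .filter((c): c is { type: "text"; text: string } => c.type === "text")
    .map((c) => c.text)
    .join("\n");
  if (result.isError) throw new Error(`Tool failed: ${text}`);
  return text;
}

// Hypothetical tool exposed by an image-generation MCP server.
const request = toolCallRequest("generate_texture", {
  prompt: "1980s neon billboard advertising a VHS rental shop",
  width: 1024,
  height: 512,
});
console.log(JSON.stringify(request));
```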

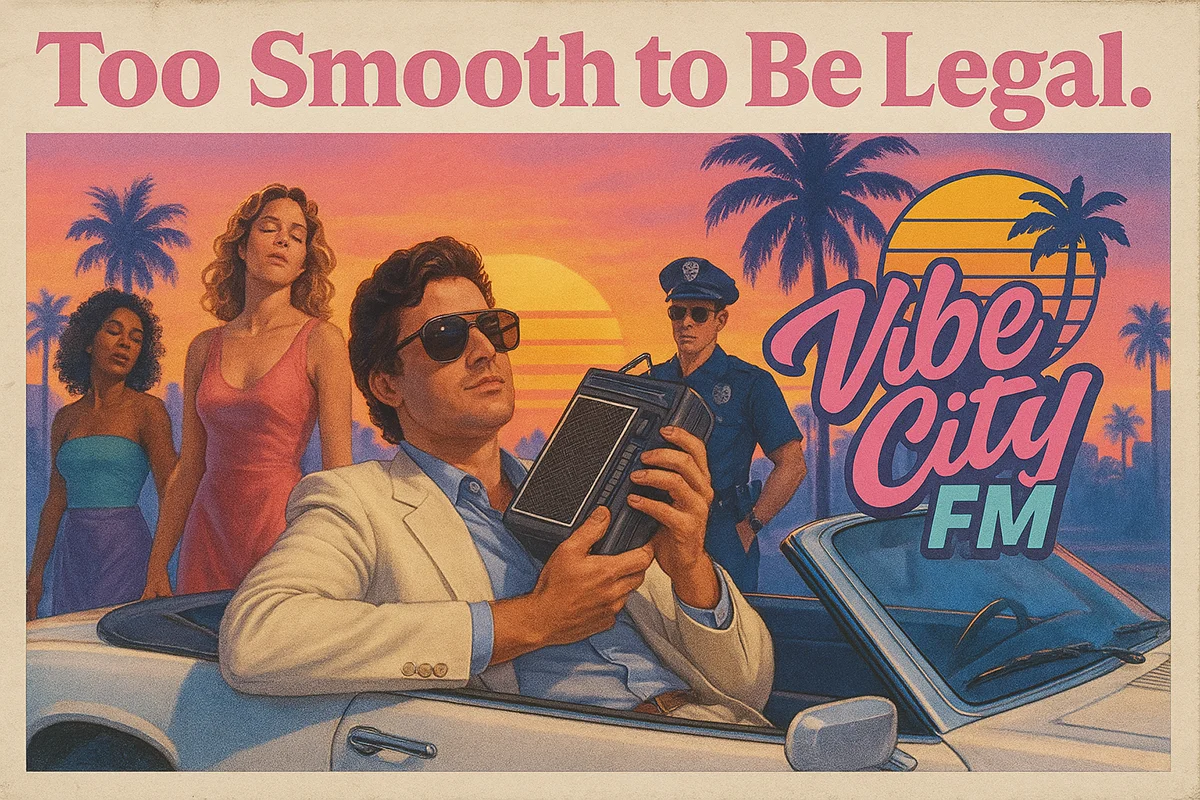

On the game map, I wanted to place billboards that humorously referenced 1980s stereotypes. Initially, I planned to use the Stable Diffusion model to generate background images for the billboards, then retouch them and add the text manually. But around halfway through the jam, OpenAI rolled out image generation in GPT-4o (you might have caught the wave of the so-called “Ghibli” trend it set off), and that release made my plan obsolete. In the end, ChatGPT handled everything on its own, including coming up with ideas for the billboard content. Little did I know that the biggest surprise was still waiting for me.

That shock came when I started generating music. I’ve always seen music as the pinnacle of the audiovisual experience. In my world, there’s no such thing as a perfect movie without perfect music, and the same goes for games. For me, music sometimes accounts for as much as 50% of whether a film or game truly succeeds in creating the right atmosphere. At the same time, I had come to terms with the idea (in theory, since my ambitions were never that high) that no matter what creative project I might pursue, I’d never achieve a truly perfect atmosphere without licensing tracks from Hans Zimmer or hiring world-class vocalists.

This jam hasn’t entirely changed that opinion, but I never expected that a single person with no money and no musical skills could even come close to that kind of “perfect atmosphere” in terms of music. That expectation has now changed. When I discovered the Suno AI music generation tool, I was absolutely blown away, and I paused development on the game for several days just to focus on creating music. If you’re curious, you can listen to a small selection of the tracks I eventually included in the game here.

Lastly, my expectations were also exceeded when it came to sound effects, which I created with ElevenLabs. While some sounds required a bit of manual tweaking, sooner or later I was able to generate everything I needed. And thanks to AI code generation tools, integrating those sounds into the game was incredibly fast, even for advanced features such as distance-based volume modulation or simulating acceleration and gear shifting with a single looping engine sound. Just like music, sound effects have a huge impact on the atmosphere of a game. The fact that I was able to create and implement them so easily still feels almost unbelievable to me.
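The article doesn't show how the single looping engine sample was made to simulate acceleration and gear shifts, so here is one common approach, sketched under our own assumptions: derive a stand-in for engine RPM from the car's position within the current gear's speed range, map it to the sample's playback rate, and let the pitch drop when the gearbox shifts up. The gear table, rates, and function names are hypothetical. The distance attenuation uses the inverse-distance formula that the Web Audio API's `PannerNode` applies with `distanceModel = "inverse"`; Three.js `PositionalAudio` wraps a `PannerNode` and exposes `setRefDistance` and `setRolloffFactor` for the same parameters.

```typescript
// Hypothetical gearbox: top speed (m/s) of each gear, lowest gear first.
const GEAR_TOP_SPEEDS = [8, 16, 26, 38, 52];
const IDLE_RATE = 0.8; // playback rate of the loop at the bottom of a gear
const MAX_RATE = 2.0;  // playback rate just before shifting up

interface EngineSound {
  gear: number;         // 1-based gear index
  playbackRate: number; // e.g. assigned to an AudioBufferSourceNode's playbackRate.value
}

/** Picks a gear from speed and maps the position within that gear to a pitch. */
function engineSound(speed: number): EngineSound {
  const s = Math.abs(speed); // reversing revs the engine the same way
  let gearIndex = GEAR_TOP_SPEEDS.findIndex((top) => s < top);
  if (gearIndex === -1) gearIndex = GEAR_TOP_SPEEDS.length - 1; // top gear, at the limiter
  const low = gearIndex === 0 ? 0 : GEAR_TOP_SPEEDS[gearIndex - 1];
  const high = GEAR_TOP_SPEEDS[gearIndex];
  // 0 at the start of the gear, 1 at its top speed: a stand-in for engine RPM.
  const rpm = Math.min((s - low) / (high - low), 1);
  return { gear: gearIndex + 1, playbackRate: IDLE_RATE + (MAX_RATE - IDLE_RATE) * rpm };
}

/** Web Audio "inverse" distance model: full volume up to refDistance, then falls off. */
function inverseDistanceGain(distance: number, refDistance = 1, rolloffFactor = 1): number {
  const d = Math.max(distance, refDistance);
  return refDistance / (refDistance + rolloffFactor * (d - refDistance));
}
```

The pitch drop at each shift boundary is what makes one loop read as “accelerating, shifting, accelerating again”.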

Back to Vibe Coding… So Should We All Just Code This Way Now?

My experience with “vibe coding” during this hackathon was fantastic, and I know that even people with no prior programming experience participated successfully. However, we’re still not at the point where non-developers can take over the work of a software team on anything beyond weekend hobby prototypes.

The rise of Vibe Coding has also brought cautionary tales from excited “new programmers”: stories of unexpected API bills, people falling victim to hacking attacks, accidentally wiping real customer databases, or breaking their code entirely with a new update (often without even knowing what version control is).

Where Vibe Coding truly shines is in the development of so-called “throwaway” software: projects where quality and security requirements are minimal or nonexistent. In practice, that includes prototypes, proofs of concept, small automation scripts, one-off software or extensions for individual users, and hackathons like the one where my game was created.

The video is sped up; the original recording was 22 minutes long. You can try out the final game from the video via this link.

Vibe Coding is especially fast and rewarding in the early stages of a project. However, without thoughtful planning and diligent oversight of the code, a growing project becomes less and less manageable over time (we’ve written about sustainable development before).

Language models tend to offer quick-fix solutions and, instead of solving root causes, sometimes just layer patch upon patch. (Although, to be fair, they’ve improved dramatically since their early days.)

That’s why there’s huge added value when experienced developers use these tools: developers who can keep up with the AI, point out flaws, and guide it toward better solutions. AI is willing to listen, but it needs someone beside it who understands the big picture, sees several steps ahead, and grasps the project’s goals and the user’s needs.

Working with AI tools therefore requires not just curiosity and creativity but also caution and self-discipline (so that developers don’t get lazy). What AI still doesn’t take over, at least not yet, is responsibility for the result. That part remains in our hands. For now. 😶

If you’d like to try out the game I worked on during Vibe Jam, you can do so at this link.

And if you’re thinking about launching a similar project yourself—whether it’s a game or something else—go for it! It’s never been easier. We’d love to hear from you, so feel free to share your results, thoughts, or questions with us on social media!

Was this article written by artificial intelligence?

Tomáš Bencko

The author is a frontend developer specializing in React, Vue.js, and TypeScript. He develops modern, scalable frontend solutions while balancing development with the finer points of design. Outside of client work, he’s constantly seeking ways to improve team workflows, experimenting with AI and automation, and bringing fresh ideas to advance projects and inspire colleagues.